Broadband Network

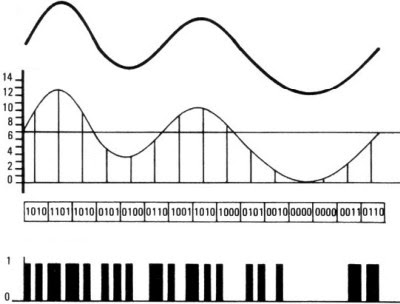

In general, broadband refers to telecommunication in which a wide band of frequencies is available to transmit information. Because a wide band of frequencies is available, information can be multiplexed and sent on many different frequencies or channels within the band concurrently, allowing more information to be transmitted in a given amount of time (much as more lanes on a highway allow more cars to travel on it at the same time). Related terms are wideband (a synonym), baseband (a one-channel band), and narrowband (sometimes meaning just wide enough to carry voice, or simply "not broadband," and sometimes meaning specifically between 50 cps and 64 Kbps).
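The highway analogy can be made concrete with a little arithmetic. The following is a minimal sketch only: the band width, channel width and per-channel rate are hypothetical numbers chosen for illustration, and guard bands between channels are ignored.

```python
def channel_count(band_hz: float, channel_hz: float) -> int:
    """Number of whole channels that fit in a band (guard bands ignored)."""
    return int(band_hz // channel_hz)


def aggregate_rate_bps(channels: int, rate_per_channel_bps: float) -> float:
    """Total rate when every channel carries traffic concurrently."""
    return channels * rate_per_channel_bps


# Hypothetical figures: a 60 MHz band split into 6 MHz channels,
# each channel assumed to carry 30 Mbps.
lanes = channel_count(60e6, 6e6)
total = aggregate_rate_bps(lanes, 30e6)
print(f"{lanes} channels, {total / 1e6:.0f} Mbps in aggregate")
```

Doubling the band doubles the number of channels, and with it the aggregate rate, which is the point of the "more lanes" comparison.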

Various definers of broadband have assigned a minimum data rate to the term. Here are a few:

• Newton's Telecom Dictionary: "...greater than a voice grade line of 3 KHz...some say [it should be at least] 20 KHz."

• Jupiter Communications: at least 256 Kbps.

• IBM Dictionary of Computing: A broadband channel is "6 MHz wide."

It is generally agreed that Digital Subscriber Line (DSL) and cable TV are broadband services in the downstream direction.

Broadband Traffic

Types of traffic carried by the network

Modern networks have to carry integrated traffic consisting of voice, video and data. The Broadband Integrated Services Digital Network (B-ISDN) satisfies these needs. The types of traffic supported by a broadband network can be classified according to three characteristics:

• Bandwidth is the amount of network capacity required to support a connection.

• Latency is the amount of delay associated with a connection. Requesting low latency in the Quality of Service (QoS) profile means that the cells need to travel quickly from one point in the network to another.

• Cell-delay variation (CDV) is the range of delays experienced by each group of associated cells. Low cell-delay variation means a group of cells must travel through the network without getting too far apart from one another.
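The three characteristics above can be estimated from simple measurements. The sketch below is illustrative only: it treats cell-delay variation as the peak-to-peak range of observed delays, as described above, and it assumes 53-byte ATM cells when converting a cell rate to bandwidth. The function names are hypothetical.

```python
def latency_and_cdv(delays_ms):
    """Return (mean delay, peak-to-peak cell-delay variation) in milliseconds."""
    mean_delay = sum(delays_ms) / len(delays_ms)
    cdv = max(delays_ms) - min(delays_ms)  # range of delays within the group
    return mean_delay, cdv


def required_bandwidth_bps(cells_per_second, cell_bytes=53):
    """Bandwidth needed to carry a given cell rate (53-byte cells assumed)."""
    return cells_per_second * cell_bytes * 8


# Hypothetical delays measured for one group of associated cells.
mean_delay, cdv = latency_and_cdv([10, 12, 11, 15])
print(mean_delay, cdv, required_bandwidth_bps(1000))
```

A voice connection would typically ask for low values of both numbers returned by `latency_and_cdv`, while a bulk data transfer cares mostly about the bandwidth figure.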

Modern communication services

Society is becoming more informationally and visually oriented. Personal computing facilitates easy access, manipulation, storage, and exchange of information, and these processes require reliable data transmission. The means or media for communicating data are becoming more diverse. Communicating documents by images and the use of high-resolution graphics terminals provide a more natural and informative mode of human interaction than do voice and data alone. Video teleconferencing enhances group interaction at a distance. High-definition entertainment video improves the quality of pictures, but requires much higher transmission rates. These new data transmission requirements may require new transmission means other than the present overcrowded radio spectrum. A modern telecommunications network (such as the broadband network) must provide all these different services (multi-services) to the user.

Differences between traditional (telephony) and modern communication services

Conventional telephony:

• uses the voice medium only,

• connects only two telephones per call, and

• uses circuits of fixed bit-rates.

In contrast, modern communication services depart from the conventional telephony service in these three essential aspects. Modern communication services can be:

• multi-media,

• multi-point, and

• multi-rate.

These aspects are examined individually in the following three sub-sections.

Multi-media

A multi-media call may communicate audio, data, still images, or full-motion video, or any combination of these media. Each medium has different demands for communication quality, such as:

• bandwidth requirement,

• signal latency within the network, and

• signal fidelity upon delivery by the network.

The information content of each medium may affect the information generated by other media. For example, voice could be transcribed into data via voice recognition, and data commands may control the way voice and video are presented. These interactions most often occur at the communication terminals, but may also occur within the network.

Multi-point

A few examples will be used to contrast point-to-point communications with multi-point communications. Traditional voice calls are predominantly two party calls, requiring a point-to-point connection using only the voice medium. To access pictorial information in a remote database would require a point-to-point connection that sends low bit-rate queries to the database and high bit-rate video from the database. Entertainment video applications are largely point-to-multi-point connections, requiring one-way communication of full motion video and audio from the program source to the viewers. Video teleconferencing involves connections among many parties, communicating voice, video, as well as data. Offering future services thus requires flexible management of the connection and media requests of a multi-point, multi-media communication call [3][4].

Multi-rate

A multi-rate service network is one which flexibly allocates transmission capacity to connections. A multi-media network has to support a broad range of bit-rates demanded by connections, not only because there are many communication media, but also because a communication medium may be encoded by algorithms with different bit-rates. For example, audio signals can be encoded with bit-rates ranging from less than 1 kbit/s to hundreds of kbit/s, using different encoding algorithms with a wide range of complexity and quality of audio reproduction. Similarly, full motion video signals may be encoded with bit-rates ranging from less than 1 Mbit/s to hundreds of Mbit/s. Thus a network transporting both video and audio signals may have to integrate traffic with a very broad range of bit-rates.
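Flexible allocation of capacity to connections of very different bit-rates can be sketched as a simple admission check on a shared link. This is a toy model under stated assumptions: the `Link` class, its method names and the example rates are hypothetical, and real networks use far richer traffic descriptors than a single peak rate.

```python
from dataclasses import dataclass, field


@dataclass
class Link:
    """A shared link that admits connections while capacity remains."""
    capacity_bps: float
    allocated: dict = field(default_factory=dict)

    def free_bps(self) -> float:
        return self.capacity_bps - sum(self.allocated.values())

    def admit(self, conn_id: str, rate_bps: float) -> bool:
        """Reserve rate_bps for conn_id if it fits; otherwise refuse."""
        if rate_bps <= self.free_bps():
            self.allocated[conn_id] = rate_bps
            return True
        return False

    def release(self, conn_id: str) -> None:
        self.allocated.pop(conn_id, None)


# Hypothetical 10 Mbit/s link carrying one audio and one video connection.
link = Link(capacity_bps=10e6)
print(link.admit("audio", 64e3))    # low-rate audio fits
print(link.admit("video", 8e6))     # high-rate video fits
print(link.admit("video-2", 4e6))   # refused: not enough capacity left
```

The same link object serves a 64 kbit/s audio stream and an 8 Mbit/s video stream, which is the sense in which the network is multi-rate.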

Wireless Broadband Networks

What is a Wireless Broadband Network?

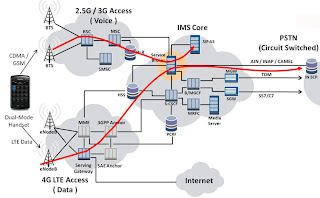

Wireless broadband terminology should not be confused with the generic term “broadband networking” or BISDN (Broadband Integrated Services Digital Network), which refers to various network technologies (fiber or optical) implemented by ISPs and NSPs to achieve transmission speeds higher than 155 Mbps for the Internet backbone. In a lay-person’s terms, BISDN is the wire and cable that run through walls, under floors, from pole to telephone pole, and beneath feet on a city street. BISDN is a concept and a set of services and developing standards for integrating digital transmission services in a broadband network of fiber optic and radio media. BISDN encompasses frame relay service for high-speed data that can be sent in large bursts, the Fiber Distributed-Data Interface (FDDI), and the Synchronous Optical Network (SONET). BISDN supports transmission from 2 Mbps to much higher transfer rates.

Wireless broadband, on the other hand, refers to the wireless network technology that addresses the “last mile” problem: it connects isolated customer premises to an ISP or carrier’s backbone network without leasing traditional T-1 and higher speed copper or fiber channels from the local telecommunication service provider. Wireless broadband refers to fixed wireless connectivity that can be utilized by enterprises, businesses, households and telecommuters who travel from one fixed location to another fixed location. In its current implementation, it does not address the needs of “mobile users” on the road.

Technologically, wireless broadband is an extension of the point-to-point, wireless-LAN bridging concept to deliver high-speed and high capacity pipe that can be used for voice, multi-media and Internet access services. While in simple implementations, primary use of wireless broadband is for connecting LANs to the Internet, in more sophisticated implementations, you may connect multiple services (data, voice, video) over the same pipe. The latter requires multiplexing equipment at customer premises or in a central hub.

From an implementation perspective, wireless broadband circumvents physical telecommunications networks; it is as feasible in rural as it is in urban areas. For regions that have not yet built out cable and copper wire infrastructures, vendor solutions that circumvent costly installation, maintenance and upgrades mean skipping 120 years of telecommunications evolution. In other areas, deregulation is making the licensing process for Wireless Service Providers (WSPs) hassle free.

Wireless broadband is faster to market, and subscribers are added incrementally, bypassing those installations that are required before wired subscribers can connect.

What Wireless Broadband Is Not

Wireless broadband is for fixed wireless connections; it does not address the mobility needs which at present only 2.5G and 3G networks intend to provide. In future, there is a technical possibility that broadband wireless radios can be miniaturized and installed in handheld devices. Then they might be able to augment 3G in mobile applications. However, it is only a possibility of the physics and electronics; none of the vendors have any prototype products in this area. Of course, wireless broadband is expected to meet the needs of residential connections to the Internet bypassing local telcos.

Four technologies for faster broadband in 2011

10G GPON

The use of PON (passive optical network) technology in fixed broadband networks has grown in popularity in the last couple of years, thanks to lower costs compared to using an optical fiber for each household. The technology calls for several households to share the same capacity, which is sent over a single optical fiber.

Today's systems have an aggregate download capacity of 2.5G bps (bits per second). The move to 10G GPON increases that by a factor of four, hence the name. The technology is also capable of an upstream capacity of 10G bps, which is eight times faster than current networks, according to Verizon Communications.

The increased capacity can either be used to handle more users or increase the bandwidth.
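The capacity figures quoted above can be checked with a few lines of arithmetic. The downstream and upstream numbers come from the text; the split ratio of 32 households per fiber is a hypothetical value used only to illustrate the "more users or more bandwidth" trade-off.

```python
CURRENT_DOWN_GBPS = 2.5   # aggregate download capacity of today's systems
NEXT_GEN_GBPS = 10.0      # 10G GPON, downstream and upstream
UPSTREAM_SPEEDUP = 8      # "eight times faster than current networks"


def per_home_share_mbps(total_gbps: float, homes: int) -> float:
    """Even share of the aggregate capacity if every home is active at once."""
    return total_gbps * 1000 / homes


down_speedup = NEXT_GEN_GBPS / CURRENT_DOWN_GBPS          # factor of four
implied_current_up_gbps = NEXT_GEN_GBPS / UPSTREAM_SPEEDUP  # 1.25 Gbps

homes = 32  # hypothetical split ratio, not taken from the text
print(down_speedup, implied_current_up_gbps)
print(per_home_share_mbps(CURRENT_DOWN_GBPS, homes),
      per_home_share_mbps(NEXT_GEN_GBPS, homes))
```

With the same number of homes, each gets four times the downstream share; alternatively, four times as many homes could be served at the old per-home rate.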

In December 2009, Verizon announced that it had conducted the first field-test for the technology. Since then, a number of operators have conducted tests, including France Telecom, Telecom Italia, Telefonica, Portugal Telecom, China Mobile and China Unicom, according to Huawei.

The first commercial services based on 10G GPON are expected in the second half of 2011, according to Alcatel-Lucent. Pioneering operator Verizon hasn't announced any commercial plans yet, according to a spokesman.

Besides broadband, the technology is also being pitched for mobile backhaul use.

VDSL2

The DSL family of technologies still dominates the fixed broadband world. To ensure that operators can continue to use their copper networks, network equipment vendors are adding some new technologies to VDSL2 to increase download speeds to several hundred megabits per second.

To boost DSL to those kinds of speeds, the vendors are using a number of technologies. One way is to send traffic over several copper pairs at the same time, compared to traditional DSL, which only uses one copper pair. This method then uses a technology -- called DSL Phantom Mode by Alcatel-Lucent and Phantom DSL by Nokia Siemens -- that can create a third virtual copper pair that sends data over a combination of two physical pairs.

However, the use of these technologies also creates crosstalk, a form of noise that degrades signal quality and decreases bandwidth. To counteract that, vendors are using a noise-canceling technology called vectoring. It works the same way as noise-canceling headphones, continuously analyzing the noise conditions on the copper cables and then creating a new signal to cancel the noise out, according to Alcatel-Lucent.
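The cancellation idea can be illustrated with a deliberately simplified two-line model. This is a sketch under strong assumptions, not how vendor equipment is implemented: the coupling is a single real-valued, symmetric coefficient `c` that is assumed to be known, whereas real systems must estimate the crosstalk and handle many lines and frequencies.

```python
def crosstalk_channel(x1: float, x2: float, c: float):
    """Each line receives its own signal plus a fraction c of its neighbour's."""
    return x1 + c * x2, x2 + c * x1


def precode(s1: float, s2: float, c: float):
    """Pre-distort the intended symbols so the crosstalk cancels on arrival.

    Solves H x = s for H = [[1, c], [c, 1]], i.e. x = H^-1 s.
    """
    det = 1 - c * c
    return (s1 - c * s2) / det, (s2 - c * s1) / det


c = 0.2                      # hypothetical coupling between the two pairs
intended = (1.0, -1.0)

plain = crosstalk_channel(*intended, c)              # distorted by crosstalk
cleaned = crosstalk_channel(*precode(*intended, c), c)  # crosstalk cancelled
print(plain, cleaned)
```

Without precoding, each receiver sees a weakened or distorted symbol; with it, the "new signal" added at the transmitter cancels the neighbour's contribution, up to floating-point rounding.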

Products are now entering field trials and the first commercial services are expected to be launched in 2011. Just like 10G GPON, it is also being pitched as an alternative for mobile backhaul.

LTE

The rollout of LTE (Long Term Evolution) is now under way in Europe, Asia and the U.S., and by the end of 2011 about 50 LTE commercial networks will have launched, according to an October report from the Global Mobile Suppliers Association (GSA) detailing current operator launch plans.

The first round of LTE services, with the exception of MetroPCS and its Samsung Craft phone, connects users with USB modems. That will change in 2011 with the arrival of LTE-capable smartphones and tablets. Verizon Wireless expects such handsets will be available by mid-2011, according to a statement.

The bandwidth and coverage operators can offer depends on their spectrum holdings.

LTE isn't just about offering higher speeds to metropolitan areas. In Germany, the government has mandated that the mobile operators first use the technology to offer broadband to rural areas.

Besides higher speeds, LTE also offers lower latencies, which will improve the performance of real-time applications sensitive to delays, including VoIP (Voice over Internet Protocol), video streaming, video conferencing and gaming.

The rollout of LTE isn't going to happen overnight, even for those operators that are now launching services. By 2013, Verizon plans to cover its entire 3G network with LTE. Telenor in Sweden also plans to have its network upgrade complete by 2013, according to a statement.

HSPA+

LTE may be getting most of the attention, but 2010 has been a banner year for HSPA+ (Evolved High-Speed Packet Access). Migration to HSPA+ has been a major trend this year and more than one in five HSPA operators have commercially launched HSPA+ networks, according to the GSA.

However, today's download speeds of up to 21Mbps are far from the end of the line for HSPA+. Nine operators -- including Bell Mobility in Canada and Telstra in Australia -- have already launched services at 42Mbps. The average real-world download speed is 7Mbps to 14Mbps, according to Bell.

To reach 42Mbps, operators use a technology called DC-HSPA+ (Dual-Carrier High-Speed Packet Access), which sends data using two carriers (channels) at the same time.

More than 30 DC-HSPA+ (42Mbps) network deployments are on-going or committed to, including one by T-Mobile in the U.S. The operator will launch services next year, but isn't ready to give any additional details on timing, according to a spokeswoman.

Also, five operators have already committed to 84Mbps, which is the next evolution step for their HSPA+ networks, the first of which is also expected to arrive next year.

Ericsson's IP Broadband Network Management

Ericsson's IP Broadband Network Management portfolio is a cutting-edge network management system solution for IP and broadband networks that contributes to improved service delivery and performance while lowering costs. It includes Ericsson IP Transport NMS, ServiceOn family and NetOp EMS.

Managing the Broadband Revolution

IP Broadband Network Management portfolio manages the complete range of technologies used for the deployment of Ericsson broadband network systems. Managed components include copper, fiber and radio-based transmission systems for both narrowband and broadband data. Managed transport technologies include IP, MPLS-TP, MPLS, Ethernet, PDH, SDH, ODU, ATM, DWDM, CWDM and xDSL.

Multi-layer management

Service, network and element management layers can be seamlessly integrated into a single solution, based on a selection of integrated products from the IP Broadband Network Management portfolio. Key features include administration of customers and services, facilities management, routing of network trails, network map presentation with fault and performance monitoring, equipment and inventory management.

Distributed, scalable and resilient architecture

IP Broadband Network Management can be tailored to the operator's requirements according to functional, organizational, security or geographical criteria. The solution is scalable and can easily grow with the managed network.

Open interfaces for integration

IP Broadband Network Management includes an open integration framework enabling it to serve as a higher-order management system for subordinate managers or network elements. The integration framework supports a number of network management protocols including SNMP, CLI, XML and CORBA. A range of strategic 3rd party network element integrations are already supported as ready-to-manage solutions.

IP Broadband Network Management supports the international TeleManagement Forum (TMF) standard to facilitate platform-independent access to system resources from a variety of distributed client applications. This architecture is used to realize a range of northbound and external interfaces including a web-based customer care management application.

Field-proven expertise

Ericsson's IP Broadband Network Management portfolio has been developed using Ericsson's field-proven worldwide expertise in telecom networks, delivering cost savings to the operators and new capabilities to the IP Broadband network portfolio. The solution fully exploits the characteristics of each network technology, delivers advanced functionality such as traffic restoration and alarm correlation, and supports operators' ways of working and procedures.

User-friendly operation

With IP Broadband Network Management, the emphasis is on user-friendliness and consistency of presentation to increase operator efficiency and effectiveness and to minimize training overheads. Network management is performed through a graphical user interface specially designed to leverage the commonality between different technologies and tasks. The user can move between managed technologies and management layers with ease.

As the IP Broadband Network Management portfolio is inherently modular, it is possible to deliver the following levels of network management for all the broadband network elements:

• Element management solutions, providing a centralized, feature-rich maintenance support platform

• Network management solutions, allowing end-to-end provisioning, fault and performance analysis across the entire domain of broadband networks

• Cross-domain management solutions, supporting multi-technology and multi-vendor networks

• Integration capabilities, providing seamless and easy integration of IP Broadband Network Management with customers' existing systems

• Service management solutions, delivering integrated service management across multiple domains

IP Broadband Network Management for broadband networks is also open to adaptation and customization to serve any special needs of operators worldwide.

• Over 650 customers worldwide

• Covering all broadband network technologies, including optical and microwave transport and broadband access

• Carrier class, Tier-1 approved with High Availability options

• Scalable to meet Tier 1, Tier 2, new operators and private network requirements

• Unique management features

• Integrated with Ericsson OSSs

Public Safety Broadband Network

Objective

To develop communications and network models for public safety broadband networks, and to analyze and optimize the performance of these networks.

Background

The FCC and the Administration have called for the deployment of a nationwide, interoperable public safety mobile broadband network. Public safety has been allocated broadband spectrum in the 700 MHz band for such a network. The National Public-Safety Telecommunications Council and the FCC have endorsed the 3GPP Long Term Evolution (LTE) standard as the technology of choice for this network.

Network Analysis and Optimization

The Emerging & Mobile Network Technologies Group (EMNTG) performs RF network analysis and optimization of public safety broadband networks using commercially available and in-house customized network modeling and simulation tools. Current efforts are focused on wide area networks based on LTE technology.

Network analyses can be used to predict wide area performance metrics over a defined geographic area, such as the uplink and downlink:

• Signal-to-interference-and-noise ratio,

• Coverage probability, and

• Achievable data rate.

The tools can also be used to optimize network configurations (e.g., sector antenna directions, transmission powers) for various optimization criteria (e.g., maximum coverage area).
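The three wide-area metrics listed above can be sketched in a few lines. This is an illustrative calculation only and not the group's tooling: the function names are hypothetical, and the achievable rate is approximated by the Shannon capacity formula, which is an upper bound rather than what an LTE link actually delivers.

```python
import math


def sinr_db(signal_mw: float, interference_mw: float, noise_mw: float) -> float:
    """Signal-to-interference-and-noise ratio in decibels."""
    return 10 * math.log10(signal_mw / (interference_mw + noise_mw))


def coverage_probability(sinr_samples_db, threshold_db: float) -> float:
    """Fraction of sampled locations whose SINR meets the threshold."""
    covered = sum(1 for s in sinr_samples_db if s >= threshold_db)
    return covered / len(sinr_samples_db)


def shannon_rate_bps(bandwidth_hz: float, sinr_db_value: float) -> float:
    """Upper bound on data rate for a given bandwidth and SINR."""
    return bandwidth_hz * math.log2(1 + 10 ** (sinr_db_value / 10))


# Hypothetical SINR samples (dB) over a grid of locations in one sector.
samples = [12.0, 3.0, -1.0, 8.0]
print(coverage_probability(samples, threshold_db=5.0))
print(shannon_rate_bps(10e6, sinr_db(1.0, 0.05, 0.05)))
```

An optimizer of the kind described above would vary antenna directions and transmit powers, recompute the SINR samples, and keep the configuration that maximizes a chosen criterion such as coverage probability.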

Protocol Modeling and Simulation

• Achieved throughput

• Communication delay

• Packet loss rate

• Network utilization

Protocol models complement the wide area network analysis and optimization tools described above to provide a more complete picture of the expected public safety communication performance. Using the cell sizes, antenna configurations, and transmission powers obtained from a network optimization, the protocol-level analysis generates realistic sector loads which can be fed back to the network analysis and optimization tools for further refinement of the optimized configuration.
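The protocol-level metrics listed in this section can be derived from a packet trace. The following is a minimal sketch assuming a hypothetical trace format of `(size_bytes, sent_s, received_s)` tuples, with `None` as the receive time for a lost packet; a real simulator would produce far more detailed records.

```python
def summarize(packets, link_rate_bps: float, duration_s: float) -> dict:
    """Compute throughput, mean delay, loss rate and utilization from a trace."""
    delivered = [p for p in packets if p[2] is not None]
    delivered_bits = sum(size * 8 for size, _, _ in delivered)
    throughput = delivered_bits / duration_s
    mean_delay = (
        sum(rx - tx for _, tx, rx in delivered) / len(delivered)
        if delivered else None
    )
    return {
        "throughput_bps": throughput,
        "mean_delay_s": mean_delay,
        "loss_rate": 1 - len(delivered) / len(packets),
        "utilization": throughput / link_rate_bps,
    }


# Hypothetical one-second trace on a 100 kbit/s link; the third packet is lost.
trace = [
    (1250, 0.0, 0.5),
    (1250, 0.1, 0.7),
    (1250, 0.2, None),
    (1250, 0.3, 0.8),
]
print(summarize(trace, link_rate_bps=100_000, duration_s=1.0))
```

Per-sector summaries of this kind are what the text refers to as sector loads that can be fed back into the wide-area optimization.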

Channel Measurements

The fidelity of the predictions generated by the network and protocol models is dependent in large part on the accuracy of the underlying RF channel propagation model. In collaboration with its partners in NIST/PML, EMNTG collects and analyzes channel measurements that are used to develop and tune RF propagation models. Of particular relevance to the Public Safety Broadband Network, these efforts include measurements in the 700 MHz public safety band.

Multiservice broadband network technology

Multiservice broadband networks are on the verge of becoming a reality. The key drivers are rapid advances in technologies; privatization and competition; the convergence of the telecommunication, data communication, entertainment, and publishing industries; and changes in consumers' life-styles.

Wide-area networks today consist of separate networks for voice, private lines, and data services, supported by a common facility infrastructure. Similar separation between voice and data networks exists on enterprise premises (Fig. 1). Residential users typically use copper loop to access voice networks as well as data networks (using voice-band modems).

Fig. 1 Current network architecture.

Public voice and private line networks have been designed for very low latency and with an unrelenting attention to reliability and quality of service. Data networks (especially the public Internet) introduce longer and less predictable delays and are not suitable for highly interactive communication. For the most part, the Internet has not yet been shown to be reliable enough for mission-critical functions.

The time is ripe for these networks to change in fundamental ways. There is a demand for services involving multiple media, increasing intelligence, diverse speeds, and varied quality-of-service requirements. Increasing dependence on network services requires all forms of networking to be as reliable as today's voice network. The revolution taking place in electronics and photonics will provide ample opportunities to satisfy these requirements.

Technological advances

The storage capacity of a single dynamic random-access memory (DRAM) chip increased from 64 kilobits in 1970 to 256 megabits in 1998, and 4-gigabit chips are possible in research laboratories (Fig. 2 a). Development of multistate transistors and new lithographic techniques (enabling the fabrication of atomic-scale transistors) promise the continuation of this trend. The computing power in a single microprocessor chip increased from 1 million instructions per second (MIPS) in 1981 to about 400 MIPS in 1998, and no limit is in sight (Fig. 2 b). These advances are being translated into explosive growth in switching and routing capacities, information processing capacities, database sizes, and data retrieval speeds. Atomic-scale transistors also promise “system on a chip,” resulting in inexpensive wearable computers, smart appliances, and wireless devices. They also promise less power consumption and longer battery life.

Fig. 2 Progress in semiconductor technologies. (a) Density of dynamic random-access memories (DRAMs). Lengths (in micrometers) are minimum internal spacings of chip components. Advances in manufacturing techniques allow narrower spacing, making possible higher density and hence larger storage capacity. (b) Microprocessor speeds.

The advances in photonics are even more remarkable. The capacity of a single optical fiber increased from 45 megabits per second in 1981 to 1.7 gigabits per second in 1990 (Fig. 3). A major change occurred with the advent of dense wavelength-division multiplexing (DWDM) and optical amplifiers. Dense wavelength-division multiplexing allows the transport of many colors (wavelengths) of light in one fiber, while optical amplifiers amplify all wavelengths in a fiber simultaneously. These two innovations have made it possible to carry 400 gigabits per second (40 wavelengths each carrying 10 gigabits per second) on a single fiber and 1600 Gbps systems are on the horizon. Experimental work in research laboratories is pushing this capacity to over 3 terabits per second. Recent innovations have made it possible to put 432 fibers in a single cable. One such cable will be able to transport the daily volume of current worldwide traffic in 60 seconds. Many new and existing operators of wide-area networks have already started capitalizing on this growth in transport capacity. Transport capacity in wide-area networks around the world is expected to increase by a factor of up to 1000 by 2005.
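The fiber and cable capacities quoted above follow from simple multiplication. In this sketch the wavelength count, per-wavelength rate and fiber count come from the text; applying the 400 Gbps per-fiber figure to every fiber in a 432-fiber cable is an assumption made here for illustration, since the text does not state which per-fiber rate underlies the 60-second claim.

```python
def fiber_capacity_gbps(wavelengths: int, gbps_per_wavelength: int) -> int:
    """Capacity of one fiber carrying several wavelengths via DWDM."""
    return wavelengths * gbps_per_wavelength


def cable_capacity_gbps(fibers: int, per_fiber_gbps: int) -> int:
    """Capacity of a cable, assuming every fiber runs at the same rate."""
    return fibers * per_fiber_gbps


per_fiber = fiber_capacity_gbps(40, 10)       # 400 Gbps per fiber
cable = cable_capacity_gbps(432, per_fiber)   # assumed: all fibers at 400 Gbps
gigabits_in_a_minute = cable * 60

print(per_fiber, "Gbps per fiber")
print(cable / 1000, "Tbps per cable")
print(gigabits_in_a_minute / 8 / 1e6, "petabytes carried in 60 seconds")
```

Under that assumption a single cable moves on the order of a petabyte per minute, which gives a sense of the traffic volume the 60-second claim implies.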

Fig. 3 Capacity growth in long-haul optical fibers. Evolution is plotted in both the total capacity and the number of wavelengths employed.

Advances in electronics and photonics will also permit tremendous increases in access speeds, releasing the residential and small business users from the current speed restriction of the “last mile” on the copper loop that links the user to the network. New digital subscriber loop (DSL) technologies use advanced coding and modulation techniques to carry from several hundred kilobits per second to 50 megabits per second on the copper loop. Hybrid fiber-coaxial (HFC) technologies allow the use of cable television channels to provide several megabits per second upstream and up to 40 megabits per second downstream for a group of about 500 homes. Similar access speeds may also be provided by hybrid fiber-wireless technologies. Fiber-to-the-curb (FTTC) and fiber-to-the-home (FTTH) technologies provide up to gigabits per second to single homes by bringing fiber closer to the end users. Passive optical networks (PONs) will allow a single fiber from the central hub to serve several hundred homes by providing one or more wavelengths to each home using wavelength splitting techniques.

Advances in network infrastructure

Innovations in software technologies, network architectures, and protocols will harness this increase in networking capacities to realize true multiservice networks.

Narrowband voice [analog, ISDN (Integrated Services Digital Network), and cellular] and Internet Protocol (IP)–based data end systems are likely to continue operating well into the future, as are the Ethernet-based local-area networks. Most of the present growth is in IP-based end systems and cellular telephones, although many parts of the world are still experiencing enormous growth in analog and ISDN telephones. New services are being developed for the IP-based end systems (such as multimedia collaboration, multimedia call centers, distance learning, networked home appliances, directory assisted networking, on-line language translation, and telemedicine). These services will coexist with the traditional voice and data services.

One challenge is to find the right network architecture for transporting efficiently the traffic generated by current and new services. An approach involving selective layered bandwidth management (SLBM) provides efficiency, robustness, and evolvability. SLBM uses all or some of the IP, Asynchronous Transfer Mode (ATM), SONET/SDH (synchronous optical network/synchronous digital hierarchy), and optical networking layers, depending on the traffic mix and volume (Fig. 4).

Fig. 4 Protocol layering and evolution. Heavy arrows represent new and future layering. PPP is Point-to-Point protocol; HDLC, High-Level Data-Link Control (used here only for the purpose of delineating the packets); SDL, Simplified Data-Link protocol; HSSF, High-Speed Synchronous Framing protocol; Cell PL, (ATM) Cell-based Physical Layer.

For the near future, ATM provides the best technology for a true multiservice backbone due to its extensive quality-of-service and traffic management features such as admission controls, traffic policing, service scheduling, and buffer management. An ATM backbone network can support the traditional voice traffic, new packet voice traffic, various types of video traffic, Ethernet traffic, and IP traffic. The ATM Adaptation Layer protocols AAL1, AAL2, and AAL5 facilitate this integration. ATM also enables creation of link-layer (layer 2) virtual private networks (VPNs) over a shared public network infrastructure while providing secure communication and performance guarantees.

Traditional voice, IP, and ATM traffic will be transported over the circuits provided by the SONET/SDH network layer over the optical (wavelength) layer. SONET/SDH networks allow partitioning of the wavelength capacity into lower-granularity circuits which can be used by this traffic for efficient networking. The SONET/SDH layer also provides extensive performance monitoring. Finally, SONET/SDH networks, formed using ring topology, allow simple and fast (several tens of milliseconds) rerouting around a failure in a link or a node. This fast restoration of service makes such failures transparent to the service layers. The optical layer will consist of point-to-point fiber links over which many wavelengths will be multiplexed to allow very efficient use of the fiber capacity. Many public carriers and enterprise networks will use this multilayered networking. However, all these situations will change over time.
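Rerouting around a failure on a ring can be illustrated with a small path search. This is a conceptual sketch only: it models a bidirectional ring as numbered nodes and picks the surviving direction, and it does not implement the actual SONET/SDH protection-switching protocols. The function name and node numbering are hypothetical.

```python
def ring_path(n_nodes: int, src: int, dst: int, failed_link=None):
    """Return the node sequence from src to dst on a ring, avoiding failed_link.

    failed_link is a pair of adjacent node numbers, or None if the ring is intact.
    """
    def walk(step: int):
        path, node = [src], src
        while node != dst:
            nxt = (node + step) % n_nodes
            if failed_link is not None and {node, nxt} == set(failed_link):
                return None  # this direction crosses the failed link
            path.append(nxt)
            node = nxt
        return path

    candidates = [p for p in (walk(+1), walk(-1)) if p is not None]
    return min(candidates, key=len) if candidates else None


print(ring_path(6, 0, 2))                      # normal: short way round
print(ring_path(6, 0, 2, failed_link=(1, 2)))  # rerouted the other way round
```

Because a ring always offers two directions between any pair of nodes, a single link failure leaves one of them intact, which is why restoration can be simple and fast.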

Rapid growth of IP end systems and high access speeds to the wide-area networks will create high point-to-point IP demands in the core backbone. At this level of traffic, the finer bandwidth partitioning provided by the ATM layer may not compensate for the additional protocol and equipment overhead. Thus, an increasingly higher portion of IP traffic will be carried directly over SONET/SDH circuits. At even higher traffic levels, even the partitioning provided by the SONET/SDH layer will be unnecessary. Meanwhile, optical cross connects and add-drop multiplexers will allow true optical-layer networking using ring or mesh topology. New techniques and algorithms being developed for very fast restoration at the optical layer will further reduce the need for the SONET/SDH layer. IP-over-wavelength networking is then expected to develop.
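The protocol overhead of carrying IP over ATM can be estimated per packet. The sketch below relies on standard ATM framing figures that are not stated in the text: 53-byte cells with 48 bytes of payload, and an 8-byte AAL5 trailer with padding to a whole number of cells. Any additional encapsulation header is ignored, which is an assumption of this example.

```python
CELL_BYTES = 53          # ATM cell: 5-byte header + 48-byte payload
CELL_PAYLOAD_BYTES = 48
AAL5_TRAILER_BYTES = 8   # trailer appended before padding to whole cells


def aal5_cells(packet_bytes: int) -> int:
    """Cells needed to carry one packet over AAL5 (no extra encapsulation)."""
    total = packet_bytes + AAL5_TRAILER_BYTES
    return -(-total // CELL_PAYLOAD_BYTES)  # ceiling division


def efficiency(packet_bytes: int) -> float:
    """Fraction of transmitted bytes that are packet bytes."""
    return packet_bytes / (aal5_cells(packet_bytes) * CELL_BYTES)


for size in (40, 41, 1500):
    print(size, aal5_cells(size), round(efficiency(size), 3))
```

A 41-byte packet needs two cells where a 40-byte packet needs one, so the overhead depends strongly on packet size; this is the kind of cost that has to be weighed against ATM's finer bandwidth partitioning.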

Of course, this simplification in the network infrastructure requires IP- and Ethernet-based networks to multiplex voice, data, video, and multimedia services directly over the SONET/SDH or dense wavelength-division multiplexing layer. Ethernet and IP protocols are being enhanced to allow such multiplexing.

New high-capacity local-area network switches (layer 2/3 switches) provide fast (10–1000 megabits per second) interfaces and large switching capacities. They also eliminate the need for contention-based access over shared-media local-area networks. Protocol standards (802.1p and 802.1Q) are being defined to provide multiple classes of service over such switches by providing different delay and loss controls.

Similarly, router technology and Internet protocols are beginning to eliminate the bottlenecks caused by software-based forwarding, rigid routing, and undifferentiated packet processing. New IP switches (layer 3 switches) use hardware-based packet forwarding in each input port, permitting very high capacities (60–1000 gigabits per second, or 50–1000 million packets per second). Protocols are being defined to use the Differentiated Services (DS) field in the header of each IP packet to signal the quality-of-service requirement of the application being supported by that packet. Intelligent buffering and packet scheduling algorithms in IP switches can use this field to provide differentiated quality of service as measured by delay, jitter, and losses. At the interface between layer 2 and layer 3 switches, conversion between 802.1p- and DS-based signaling can provide seamless quality-of-service management within enterprise and carrier networks.

Resource reservation protocols are being defined to allow the application to signal its resource requirements to the network. Traffic-policing algorithms will then check that the traffic entering the network is consistent with the resources reserved by the reservation protocols. Together, resource reservation and traffic policing will further help quality-of-service management.

Two other factors will add to the quality-of-service capability of IP networks. First, hierarchical classification of IP traffic using additional fields in the header will allow bandwidth guarantees and delay control for individual or aggregated flows, based on the users as well as the applications. Second, ongoing work on Multiprotocol Label Switching (MPLS) will allow flexible routing and traffic engineering in IP networks.
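Two of the mechanisms described above, conversion between DS-based and 802.1p signaling and traffic policing, can be illustrated with a short Python sketch. It is only an illustration under stated assumptions: the function and class names are hypothetical, the DS-to-802.1p mapping simply keeps the top three bits of the code point (a common default; real switches use configurable tables), and the policer is a plain token bucket, which is one widely used policing technique and not necessarily the one any particular product implements.

```python
from dataclasses import dataclass


def dscp_from_ds_byte(ds_byte: int) -> int:
    """Return the 6-bit code point held in the upper six bits of the DS byte."""
    return (ds_byte & 0xFF) >> 2


def dscp_to_pcp(dscp: int) -> int:
    """Map a 6-bit DS code point to a 3-bit 802.1p priority.

    Assumption: keep only the top three bits of the code point.
    """
    if not 0 <= dscp <= 63:
        raise ValueError("DS code point must be in 0..63")
    return dscp >> 3


@dataclass
class TokenBucketPolicer:
    """Hypothetical policer: checks traffic against a reserved rate and burst."""

    rate_bytes_per_s: float  # reserved rate
    burst_bytes: float       # bucket depth (largest tolerated burst)
    tokens: float = 0.0
    last_time: float = 0.0

    def __post_init__(self) -> None:
        # Start with a full bucket so an initial burst is allowed.
        self.tokens = self.burst_bytes

    def conforms(self, packet_bytes: int, now: float) -> bool:
        """Refill tokens for the elapsed time, then test one packet."""
        elapsed = max(0.0, now - self.last_time)
        self.tokens = min(self.burst_bytes,
                          self.tokens + elapsed * self.rate_bytes_per_s)
        self.last_time = now
        if packet_bytes <= self.tokens:
            self.tokens -= packet_bytes
            return True   # within the reservation: forward
        return False      # exceeds the reservation: drop or re-mark


if __name__ == "__main__":
    # 0xB8 carries code point 46, often used for low-delay voice traffic.
    dscp = dscp_from_ds_byte(0xB8)
    print("code point:", dscp, "-> 802.1p priority:", dscp_to_pcp(dscp))

    policer = TokenBucketPolicer(rate_bytes_per_s=1000.0, burst_bytes=1500.0)
    for t, size in [(0.0, 1500), (0.0, 100), (0.5, 400)]:
        print(f"t={t}s size={size}B conforms={policer.conforms(size, t)}")
```

In this sketch a non-conforming packet is simply reported; a real device would drop it or re-mark it to a lower class, depending on the policy agreed with the carrier.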

With these capabilities, public carriers can offer IP-layer (layer 3) virtual private networks to many enterprise customers over a shared infrastructure. Carrier-based policy servers, service and resource provisioning servers, and directories will interact with enterprise policy servers to map the enterprise requirements into carrier actions consistent with the overall virtual private network contract.

While backbone networks are likely to become more uniform, many different access technologies (such as copper loop, wireless, fiber, coaxial cable, fiber-cable hybrid, and satellite) will continue to play major roles in future networks. Standards are being defined for each of the access technologies to support diverse quality-of-service requirements over one interface. Thus, a true end-to-end solution is expected for multiservice broadband networking.


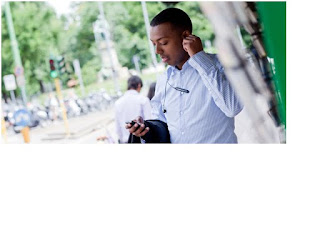

New technology uses human body for broadband networking

Your body could soon be the backbone of a broadband personal data network linking your mobile phone or MP3 player to a cordless headset, your digital camera to a PC or printer, and all the gadgets you carry around to each other.

These personal area networks are already possible using radio-based technologies, such as Wi-Fi or Bluetooth, or just plain old cables to connect devices. But NTT, the Japanese communications company, has developed a technology called RedTacton, which it claims can send data over the surface of the skin at speeds of up to 2Mbps -- equivalent to a fast broadband data connection.

Using RedTacton-enabled devices, music from an MP3 player in your pocket would pass through your clothing and shoot over your body to headphones in your ears. Instead of fiddling around with a cable to connect your digital camera to your computer, you could transfer pictures just by touching the PC while the camera is around your neck. And since data can pass from one body to another, you could also exchange electronic business cards by shaking hands, trade music files by dancing cheek to cheek, or swap phone numbers just by kissing.

NTT is not the first company to use the human body as a conduit for data: IBM pioneered the field in 1996 with a system that could transfer small amounts of data at very low speeds, and last June, Microsoft was granted a patent for "a method and apparatus for transmitting power and data using the human body."

But RedTacton is arguably the first practical system because, unlike IBM's or Microsoft's, it doesn't need transmitters to be in direct contact with the skin -- they can be built into gadgets, carried in pockets or bags, and will work within about 20cm of your body. RedTacton doesn't introduce an electric current into the body -- instead, it makes use of the minute electric field that occurs naturally on the surface of every human body. A transmitter attached to a device, such as an MP3 player, uses this field to send data by modulating the field minutely in the same way that a radio carrier wave is modulated to carry information.

Receiving data is more complicated because the strength of the electric field involved is so low. RedTacton gets around this using a technique called electric field photonics: A laser is passed through an electro-optic crystal, which deflects light differently according to the strength of the field across it. These deflections are measured and converted back into electrical signals to retrieve the transmitted data.

An obvious question, however, is why anyone would bother networking through their body when proven radio-based personal area networking technologies, such as Bluetooth, already exist. Tom Zimmerman, the inventor of the original IBM system, says body-based networking is more secure than broadcast systems, such as Bluetooth, which have a range of about 10m.

"With Bluetooth, it is difficult to rein in the signal and restrict it to the device you are trying to connect to," says Zimmerman. "You usually want to communicate with one particular thing, but in a busy place there could be hundreds of Bluetooth devices within range."

As human beings are ineffective aerials, it is very hard to pick up stray electronic signals radiating from the body, he says. "This is good for security because even if you encrypt data it is still possible that it could be decoded, but if you can't pick it up it can't be cracked."

Zimmerman also believes that, unlike infrared or Bluetooth phones and PDAs, which enable people to "beam" electronic business cards across a room without ever formally meeting, body-based networking allows for more natural interchanges of information between humans.

"If you are very close or touching someone, you are either in a busy subway train, or you are being intimate with them, or you want to communicate," he says. "I think it is good to be close to someone when you are exchanging information."

RedTacton transceivers can be treated as standard network devices, so software running over Ethernet or other TCP/IP protocol-based networks will run unmodified.

Gordon Bell, a senior researcher at Microsoft's Bay Area Research Center in San Francisco, says that while Bluetooth or other radio technologies may be perfectly suitable to link gadgets for many personal area networking purposes, there are certain applications for which RedTacton technology would be ideal.

"I recently acquired my own in-body device -- a pacemaker -- but it takes a special radio frequency connector to interface to it. As more and more implants go into bodies, the need for a good Internet Protocol connection increases," he says.

In the near future, the most important application for body-based networking may well be for communications within, rather than on the surface of, or outside, the body.

An intriguing possibility is that the technology will be used as a sort of secondary nervous system to link large numbers of tiny implanted components placed beneath the skin to create powerful onboard -- or in-body -- computers.

